In today’s AI market, you can find a variety of large language models (LLMs), both open-source and closed-source, offering a range of different capabilities.

Some of these models (e.g., ChatGPT, Gemini, Claude, Llama, and Mistral) already stand out from the rest because they solve a wide range of tasks more precisely and faster than others.

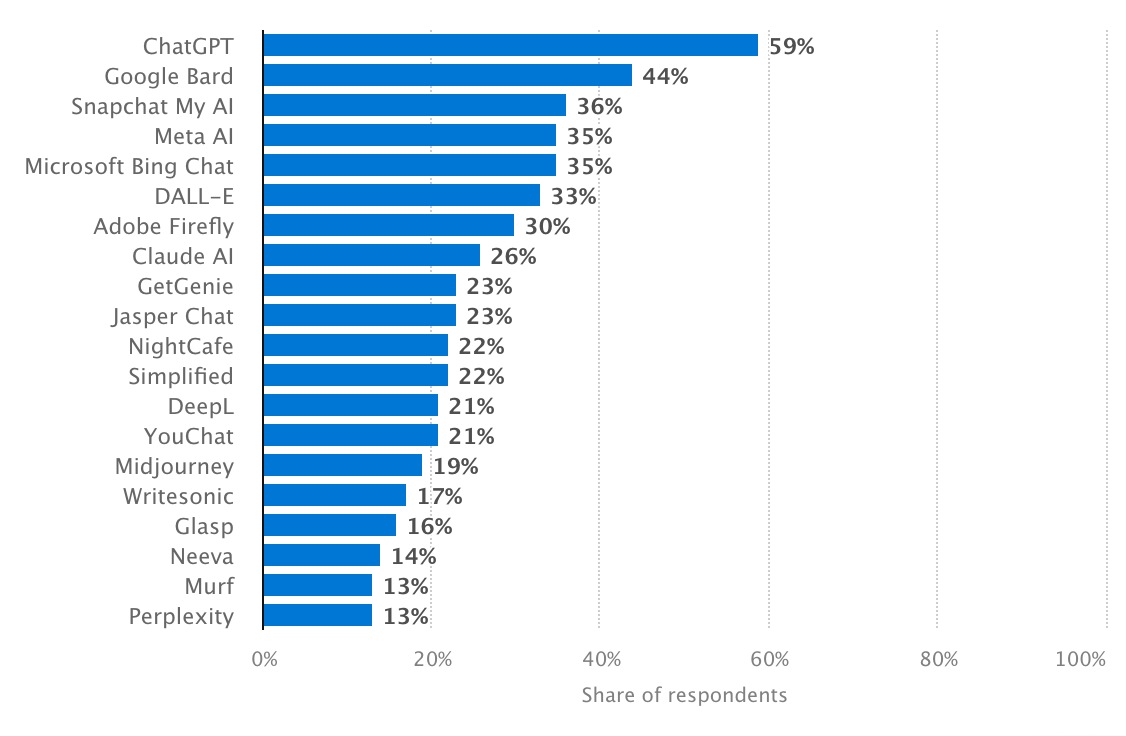

Most Popular AI Tools, Statista

But even these top-tier models, as powerful as they are, aren’t always a perfect fit out of the box. Most organizations soon find that broad, generic LLMs don’t pick up their industry terminology, in-house working methods, or brand voice. That’s where fine-tuning enters the picture.

What Is Fine-Tuning and Why It Matters in 2025

Fine-tuning refers to the practice of continuing the training of a pre-trained LLM on a small, specialized dataset related to a task, field, or organization.

Fine-tuning differs from training a model from scratch: the model keeps what it already knows and only learns a specific domain or how to behave according to specific standards and intentions.

Why Pre-Trained Models Are Not Always Enough

Pre-trained language models are commonly made to cope with a wide variety of tasks (content creation, translation, summarization, question answering, etc.), but they sometimes gloss over the details.

Since these models learn from public internet data, they might misunderstand professional language, such as legal terms, financial statements, or medical records.

Of course, their answers may sound fine, but to professionals in the field they can appear awkward, confusing, or inappropriate.

Fine-tuning helps fix this. For example, a hospital can fine-tune a model to understand medical terms and practitioners’ communication.

Or, a logistics company can train it to know the ins and outs of shipping and inventory. With fine-tuning, the model becomes more factual, uses the proper vocabulary, and fits a niche area.

Advantages of Fine-Tuning LLMs for Businesses

Fine-tuning large language models helps businesses get more value out of AI by making the model do exactly what they need it to do.

First of all, fine-tuning makes a model speak your company’s language. Every business has its own tone, style, and manner: some are formal and technical, others are friendly and warm. Supervised fine-tuning teaches the model to pick up your style and use your preferred expressions.

Additionally, fine-tuning strongly improves accuracy in specialized areas. General-purpose models can be very strong on broad benchmarks: in 2024, for instance, the OpenAI o1 model reportedly achieved the top score of 94.8% on a mathematics problem-solving benchmark.

However, as a generic model, it might not fully understand legal phrases, medical wording, or economic statements.

But if a model is tuned on data drawn deliberately from a specific industry, it learns to process and respond to advanced or technical questions much better.

Privacy is another reason businesses opt to fine-tune. Instead of making sensitive information available to a third-party service, businesses can fine-tune and deploy the model on their own networks, keeping the information protected and in line with data-protection guidelines.

Finally, fine-tuning large language models can save money over time. Although it takes some time and effort at first, a fine-tuned model gets the job done more competently and faster.

It reduces errors, takes fewer tries, and can even be cheaper than making multiple calls to a paid API for a general model.
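As a rough illustration of this cost argument, the sketch below estimates how many requests it takes before a fine-tuned model pays back its upfront cost. Every figure in it is a hypothetical placeholder rather than a real price, and the function name is made up for this example.

```python
import math


def break_even_requests(upfront_cost: float,
                        api_cost_per_request: float,
                        tuned_cost_per_request: float) -> int:
    """Return the number of requests after which fine-tuning becomes cheaper.

    All inputs are hypothetical placeholders; plug in your own figures.
    """
    saving_per_request = api_cost_per_request - tuned_cost_per_request
    if saving_per_request <= 0:
        raise ValueError("the fine-tuned model must be cheaper per request")
    # Round up: the upfront cost is only recovered once a whole request
    # pushes the accumulated savings past it.
    return math.ceil(upfront_cost / saving_per_request)


# Hypothetical numbers: 5,000 upfront, 0.02 per API call, 0.005 per self-hosted call.
print(break_even_requests(5000.0, 0.02, 0.005))
```

If your expected request volume is well below the break-even figure, a general model behind an API may remain the cheaper option.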

Top Fine-Tuning Methods in 2025

Fine-tuning in 2025 has become more accessible and easier than before. Organizations no longer need huge budgets or a lot of machine learning experience to refine a model for their use.

There is now a variety of well-tested approaches, from full retraining to lightweight tuning, that let organizations select the best fit for their goals, data, and infrastructure.

Full Fine-Tuning – The Most Effective Method

Full fine-tuning is defined by IBM as an approach that uses the pre-existing knowledge of the base model as a starting point to adjust the model according to a smaller, task-specific dataset.

Full fine-tuning updates all of the model’s parameter weights, which were already set during pre-training, in order to adapt the model to a specific task.

LoRA and PEFT

If you want something faster and less expensive, PEFT (Parameter-Efficient Fine-Tuning) methods such as LoRA (Low-Rank Adaptation) are smart choices.

These methods adjust only a small portion of the model instead of the whole model. LoRA, for example, keeps the original weights frozen and trains small low-rank matrices added alongside them. They work well even with less task-specific data and fewer compute resources, which makes them a popular choice for startups and medium-sized companies.
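To make the savings concrete, the sketch below counts trainable weights for a single layer under LoRA’s usual formulation, in which the frozen weight matrix W is left alone and a low-rank product B·A is trained next to it. The layer sizes and rank are illustrative assumptions and are not taken from any specific model.

```python
def full_param_count(d_in: int, d_out: int) -> int:
    """Trainable weights when the whole d_out x d_in matrix is updated."""
    return d_in * d_out


def lora_param_count(d_in: int, d_out: int, rank: int) -> int:
    """Trainable weights for a LoRA update W + B @ A.

    A has shape (rank, d_in) and B has shape (d_out, rank); W stays frozen.
    """
    return rank * d_in + d_out * rank


# Illustrative sizes only (not taken from any specific model).
d_in, d_out, rank = 4096, 4096, 8
full = full_param_count(d_in, d_out)
lora = lora_param_count(d_in, d_out, rank)
print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.4%}")
```

With these assumed sizes, the low-rank update trains well under one percent of the weights that full fine-tuning would touch for the same layer.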

Instruction Fine-Tuning

Another useful technique is instruction fine-tuning. It trains the model on examples of instructions paired with the desired responses, so the model gets better at following instructions and gives more concise, practical answers. It’s particularly useful for AI assistants that offer support, training, or advice.
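In practice, instruction data is usually a list of instruction and response pairs. The sketch below shows one way to turn such pairs into JSON Lines training records. The example texts, the field names, and the "### Instruction / ### Response" template are all assumptions for illustration; use whatever format your chosen model or toolkit expects.

```python
import json

# Hypothetical examples; real data would come from your own support logs or docs.
examples = [
    {"instruction": "Summarize the refund policy in one sentence.",
     "response": "Refunds are available within 30 days of purchase with a receipt."},
    {"instruction": "Reply to a customer asking about delivery times.",
     "response": "Standard delivery takes 3 to 5 business days."},
]


def to_training_text(example: dict) -> str:
    """Join an instruction and its target response into one training string.

    The template below is an assumed convention, not a required format.
    """
    return (f"### Instruction:\n{example['instruction']}\n\n"
            f"### Response:\n{example['response']}")


def to_jsonl(records: list[dict]) -> str:
    """Serialize records as JSON Lines, one training example per line."""
    return "\n".join(json.dumps({"text": to_training_text(r)}) for r in records)


print(to_jsonl(examples))
```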

RLHF (Reinforcement Learning from Human Feedback)

RLHF (Reinforcement Learning from Human Feedback) is intended for demanding, high-stakes use. Human reviewers compare or rate the model’s answers, and the model is then trained to favor the responses people judged to be better.

RLHF is more advanced and complex, but well suited to producing high-quality, reliable AI such as legal research assistants or expert advisors.
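One building block of RLHF is a reward model that learns from pairs of answers, one preferred by a human and one rejected. The sketch below shows the commonly used pairwise loss for that step. The article does not say which RLHF variant it has in mind, so treat this as one representative piece rather than a full pipeline; the reward scores are hypothetical numbers.

```python
import math


def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Pairwise loss -log(sigmoid(r_chosen - r_rejected)).

    Small when the reward model already scores the human-preferred
    answer higher, large when it prefers the rejected one.
    """
    margin = reward_chosen - reward_rejected
    # softplus(-margin) equals -log(sigmoid(margin)); this form avoids overflow.
    return math.log1p(math.exp(-abs(margin))) + max(-margin, 0.0)


# Hypothetical reward scores for one (chosen, rejected) answer pair.
print(preference_loss(2.0, 0.0))  # preferred answer already ranked higher
print(preference_loss(0.0, 2.0))  # ranking is wrong, so the loss is larger
```

Minimizing this loss over many human-labeled pairs pushes the reward model to score preferred answers higher; the language model is then optimized against that reward.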

Prompt-Tuning and Adapters

If you simply need an easy and fast way to adapt your model, you can use prompt tuning or adapters. These methods leave the original model weights untouched. Instead, they rely on small add-on modules or learned prompts to guide the model’s behavior. They are fast, cheap, and easy to try out.

| Method | What It Does | Cost / Speed | Best For |
|---|---|---|---|
| Full Fine-Tuning | Trains the entire model on new data | High / Slow | Large-scale, high-performance needs |
| LoRA / PEFT | Tunes only select parameters | Low / Fast | Startups, resource-limited teams |
| Instruction Tuning | Improves response to user commands | Medium / Moderate | AI assistants, support bots |
| RLHF | Trains with human feedback and reward signals | High / Moderate | Expert-level, safe, reliable outputs |
| Prompt/Adapters | Adds small modules or prompts, no retraining | Very Low / Very Fast | Quick testing, cheap customization |

Top Fine-Tuning Methods in 2025 – At a Glance

What Do You Need to Fine-Tune a Large Language Model in 2025: Best Practices

Fine-tuning an LLM in 2025 is affordable even for companies without an ML engineering team. However, to achieve accurate and reliable results, it is important to approach the process correctly.

The first step is to choose the type of model: open-source or closed-source. Open models (e.g., LLaMA, Mistral) give you more control: you can host them on your own servers, customize the model architecture, and manage the data yourself.

Closed models (like GPT or Claude) offer high power and quality but are accessed through APIs, so you don’t get full control.

If data security and flexibility are critical for your company, open models are preferable. If speed of launch and minimal technical barriers matter more, closed models are the better choice.

Next, you need adequate training data, which means clean, well-organized examples from your field, such as emails, support chats, documents, or other texts your company works with.

The better your data, the smarter and more useful the model will be after fine-tuning. Without it, the model might sound nice, but it gets things wrong or misses the point.
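As a minimal sketch of what "clean" can mean in practice, the function below normalizes whitespace, drops fragments that are too short to be useful, and removes duplicates. The function name, the word threshold, and the sample texts are assumptions for illustration; real pipelines typically add steps such as removing personal data.

```python
import re


def clean_examples(raw_texts: list[str], min_words: int = 3) -> list[str]:
    """Normalize whitespace, drop very short texts, and remove duplicates.

    Duplicates are detected ignoring case and spacing. The min_words
    threshold is an arbitrary illustrative default.
    """
    seen = set()
    cleaned = []
    for text in raw_texts:
        normalized = re.sub(r"\s+", " ", text).strip()
        if len(normalized.split()) < min_words:
            continue  # too short to be a useful training example
        key = normalized.lower()
        if key in seen:
            continue  # duplicate of an earlier example
        seen.add(key)
        cleaned.append(normalized)
    return cleaned


# Hypothetical raw support messages.
raw = [
    "  Where is my   order? ",
    "where is my order?",
    "ok",
    "How do I reset my password?",
]
print(clean_examples(raw))
```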

You’ll also need the right tools and infrastructure. Some companies use cloud platforms such as AWS or Google Cloud, while others host everything locally for extra privacy. To run and monitor the training process, you can use tools such as Hugging Face or Weights & Biases.

Of course, none of this works without the right people. Fine-tuning usually involves a machine learning engineer (to train the model), a DevOps expert (to set up and run the systems), and a domain expert or business analyst (to explain what the model should learn). If you don’t already have this kind of team, building one from scratch can be expensive and slow.

That’s why many companies now work with outsourcing partners that specialize in custom AI software development. Outsourcing partners can take over the entire technical side, from selecting the model and preparing your data to training, testing, and deploying it.

Business Use Cases for Fine-Tuned LLMs

Fine-tuned models are not just smarter; they are also better suited to real-world business use cases. When you train a model on your company’s data, it absorbs the essence of your business, so it generates valuable, accurate outputs instead of bland answers.

AI Customer Support Agents

Instead of having a generic chatbot, you can build a support agent familiar with your services, products, and policies. It can respond like a trained human agent, with the correct tone and up-to-date information.

Personalized Virtual Assistants

A fine-tuned model can help with specific tasks such as processing orders, answering HR questions, scheduling interviews, or tracking shipments. These assistants learn from your internal documents and systems, so they know how things get done in your company.

Enterprise Knowledge Management

In large companies and enterprises, there are simply too many documents, manuals, and corporate policies to remember.

A fine-tuned LLM can read through all of them and give workers simple answers within seconds. It saves time and lets people find the information they need without digging through files or PDFs.

Domain-Specific Copilots (Legal, Medical, E-commerce)

Specialized copilots can assist professionals with their daily work:

- Lawyers get help reviewing contracts or summarizing legal cases.

- Doctors can use the model to draft notes or understand patient history faster.

- E-commerce teams can quickly create product descriptions, update catalogs, or analyze customer reviews.

Case Study: Smart Travel Guide

One of the best examples of a fine-tuned model is the Smart Travel Guide AI. It was fine-tuned to help travelers with personalized tips based on their likes, location, and local events. Instead of offering generic tips, it builds customized routes and recommendations.

Challenges in Fine-Tuning LLMs

Fine-tuning an LLM is generally very useful, but it comes with some obstacles.

The first serious challenge is having enough data. Fine-tuning only works if you have enough clean, structured, and valuable examples to train on.

If your dataset is unorganized, inadequate, or full of errors, the model might not learn what you actually require. To put it differently: garbage in, garbage out, no matter how advanced the model.

Then, of course, there is the cost of training and maintaining the model. These models require a tremendous amount of computing power, especially the larger ones.

But the expense doesn’t stop after training. You will also need to test the model, revise it, and verify that it keeps working satisfactorily over the long term.

Another issue is overfitting. This is when the model learns your training data too perfectly, and nothing else. It can give great answers on examples it has already seen, but fall apart when someone asks it a new or even somewhat different question.
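A common guard against overfitting is to hold back a validation set and stop training once the loss on it stops improving. The sketch below shows that early-stopping check under simple assumptions; the function name, the patience value, and the loss figures are hypothetical.

```python
def best_checkpoint(val_losses: list[float], patience: int = 2) -> int:
    """Return the index of the epoch to keep, using simple early stopping.

    The scan stops once validation loss has failed to improve for
    `patience` consecutive epochs after the best epoch seen so far.
    """
    best_epoch = 0
    for epoch in range(1, len(val_losses)):
        if val_losses[epoch] < val_losses[best_epoch]:
            best_epoch = epoch
        elif epoch - best_epoch >= patience:
            break  # no improvement for `patience` epochs: likely overfitting
    return best_epoch


# Hypothetical validation losses: they fall at first, then turn upward
# while the training loss (not shown) would keep falling.
val_losses = [2.2, 1.6, 1.3, 1.4, 1.6, 1.9]
print(best_checkpoint(val_losses))
```

Keeping the checkpoint from the best validation epoch, rather than the last one, favors the version of the model that generalizes best to unseen questions.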

Equally important are legal and ethical factors. If your model gives advice, handles sensitive data, or makes decisions, you must be extra careful.

You must make sure it’s not biased, never produces harmful outputs, and adheres to privacy laws like GDPR or HIPAA.

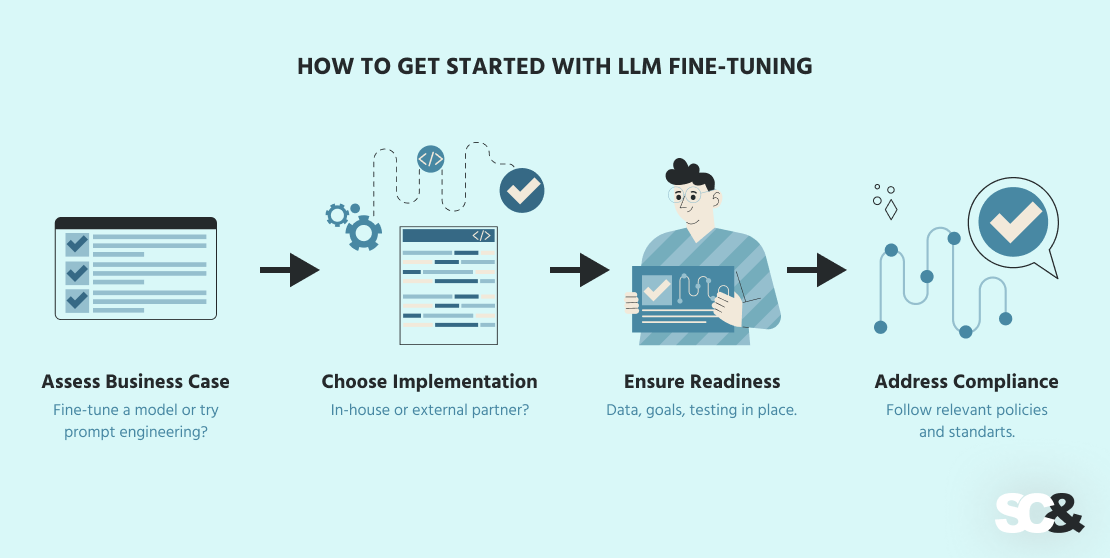

How to Get Started with LLM Fine-Tuning

If you are thinking about fine-tuning, the good news is you don’t have to jump in blindly. With the right approach, it can be a painless and highly rewarding process.

The first thing to do is to assess your business case. Ask yourself: Do you really need to fine-tune a model, or can prompt engineering (writing smarter, more detailed prompts) give you the results you want? For many simple tasks or domains, prompt engineering is cheaper and faster.

But if you’re dealing with industry-specific language, strict tone requirements, or private data, fine-tuning can offer a much better long-term solution.

Next, decide whether to run the project in-house or work with an external partner. Building your own AI team gives you full control, but it takes time, budget, and specialized talent.

On the other hand, an outsourcing partner, such as SCAND, can take over the technical side entirely. They can help you pick the right model, prepare your data, fine-tune and deploy the model, and even help with prompt engineering.

Before getting started, make sure your company is ready. You’ll need enough clean data, clear goals for the model, and a way to test how well it works.
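A "way to test how well it works" can start very small: a held-out list of questions with known answers and a score. The sketch below computes exact-match accuracy under that assumption. The `dummy_model` function is a stand-in for a real inference call, and the questions and answers are made up for the example.

```python
from typing import Callable


def exact_match_accuracy(model: Callable[[str], str],
                         test_set: list[tuple[str, str]]) -> float:
    """Share of prompts whose answer matches the expected text (case-insensitive)."""
    if not test_set:
        raise ValueError("test_set must not be empty")
    hits = sum(
        model(prompt).strip().lower() == expected.strip().lower()
        for prompt, expected in test_set
    )
    return hits / len(test_set)


# Stand-in for a real model call; replace with your own inference function.
def dummy_model(prompt: str) -> str:
    canned = {"What is the return window?": "30 days"}
    return canned.get(prompt, "I don't know")


# Hypothetical held-out questions the model never saw during training.
held_out = [
    ("What is the return window?", "30 days"),
    ("Do you ship abroad?", "Yes"),
]
print(exact_match_accuracy(dummy_model, held_out))
```

Exact match is a deliberately strict metric; for free-form answers, teams often add human review or softer similarity scores on top of it.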

Finally, don’t forget about security and compliance. If your model will work with confidential, legal, or medical data, it must adhere to all applicable policies and regulations.

How SCAND Can Help

If you don’t have the time or technical team to do it in-house, SCAND can take care of the entire process.

We’ll help you choose the right AI model for your business (open-source like LLaMA or Mistral, or closed-source like GPT or Claude). We’ll then clean and prep your data so it’s set and ready.

Then we do the rest: fine-tuning the model, deploying it in the cloud or on your servers, and monitoring its performance to make sure it communicates well and works reliably.

If you require additional protection, we also provide local hosting to secure your data and comply with applicable laws. You can also request LLM development services to get an AI model made exclusively for you.

Frequently Asked Questions (FAQs)

What exactly is fine-tuning an LLM?

Fine-tuning involves further training a pre-trained language model on your own data so that it better captures your specific industry, language, or brand voice.

Can I just use a pre-trained model as is?

You can, but pre-trained models are generic and might not handle your niche topics or tone so well. Fine-tuning calibrates the model for precision and relevance to your specific needs.

How much data is required to fine-tune a model?

That varies with your needs and model size. More high-quality, well-labeled data generally means better outcomes.

Is fine-tuning expensive?

It can be, especially for large models, and requires upkeep over time. But generally, it pays for itself in reduced reliance on costly API calls and an improved user experience.