Prompt Engineering Management System (PEMS): Optimizing Corporate AI Workflows

Since ChatGPT was released in November 2022, AI and machine learning tools have been all the rage. AI-based software existed before OpenAI introduced ChatGPT, but none of it attracted as much public interest and hype.

ChatGPT and its major competitors, Claude and Gemini, spread like wildfire, and around one billion people now use them for various tasks and purposes: creating content, writing code, debugging, and so on.

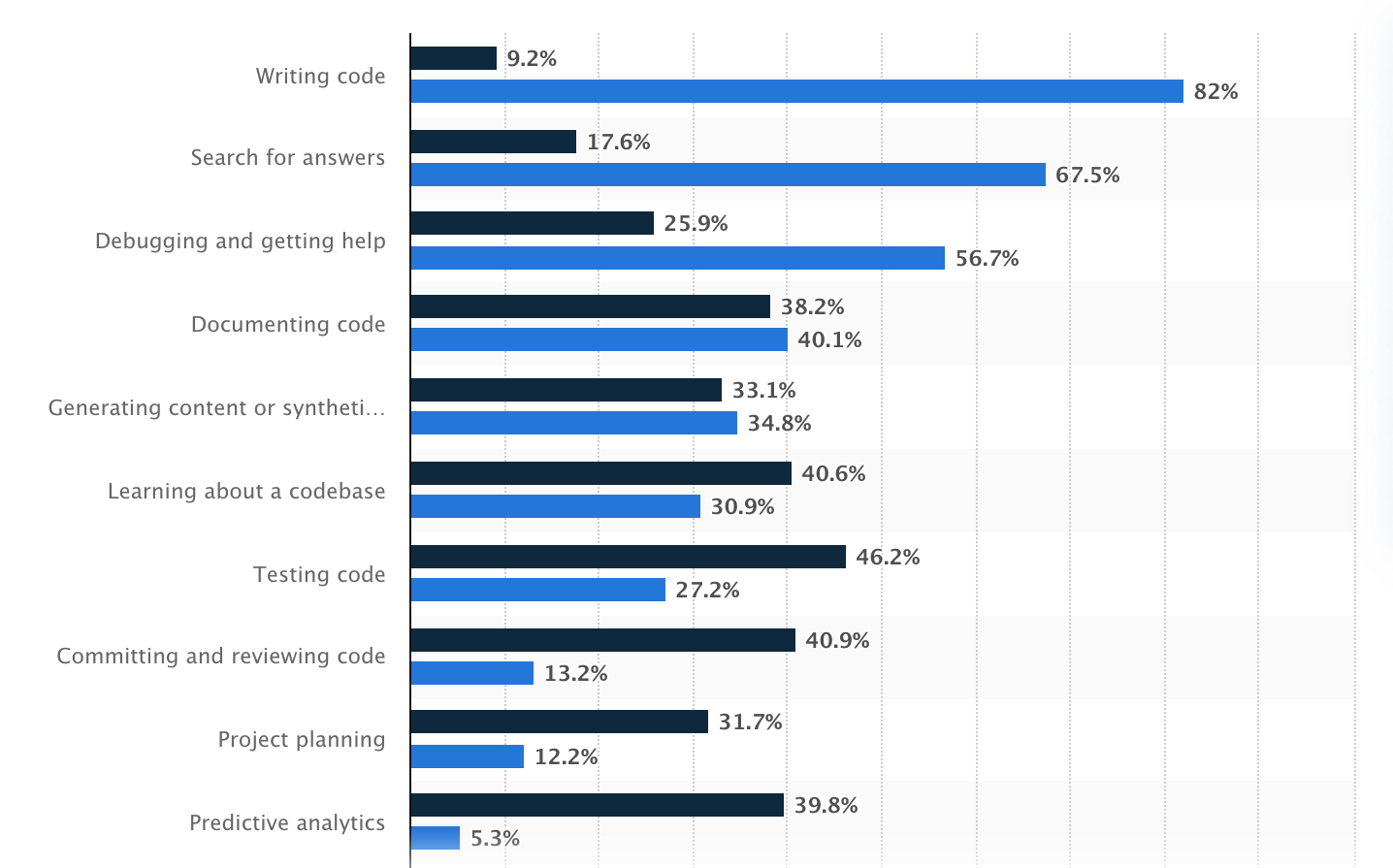

Most Popular Uses of AI, Statista

But as more companies start using tools like ChatGPT, Gemini, or local generative AI developments, it’s becoming clear that the quality and structure of prompts used matter no less than system training.

A small change in wording can mean the difference between a helpful response and a confusing one.

But writing good prompts is just the start. Businesses need a way to organize, test, and improve those prompts across teams and projects. One of the most valuable solutions is a Prompt Engineering Management System (PEMS).

What Is Prompt Engineering and Why It Matters for ChatGPT

According to McKinsey, prompt engineering is the practice of composing appropriate inputs (prompts) for Large Language Models (LLMs) to generate desired outputs. Put simply, AI prompts are the questions or instructions given to the LLM to get a specific response. The better the prompt, the better the answer.

For generative AI that can digest and process massive and varied sets of unstructured data, prompt engineering can also cover formatting, system instructions, context management, and output conditions.

For example, instead of just saying:

“Write a report”,

a well-engineered prompt might be:

“Write a 300-word report summarizing this week’s marketing results in a friendly, professional tone. Include key numbers and next steps.”
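In a team setting, a prompt like this is usually kept as a reusable template rather than retyped each time. The snippet below is a minimal sketch of that idea using Python's standard `string.Template`. The template text mirrors the example above, while the names `REPORT_PROMPT` and `build_report_prompt` and the placeholder fields are assumptions made for illustration only.

```python
from string import Template

# Hypothetical template: the placeholder names are illustrative assumptions.
REPORT_PROMPT = Template(
    "Write a $word_count-word report summarizing $topic in a $tone tone. "
    "Include key numbers and next steps."
)


def build_report_prompt(
    topic: str,
    word_count: int = 300,
    tone: str = "friendly, professional",
) -> str:
    """Fill in the template; substitute() raises KeyError if a field is missing."""
    return REPORT_PROMPT.substitute(topic=topic, word_count=word_count, tone=tone)


prompt = build_report_prompt("this week's marketing results")
print(prompt)
```

Changing the tone or length then means passing a different argument, not rewriting the whole prompt.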

In business settings, prompts are not just casual questions—they are tactical means that govern the success of AI solutions, from customer service bots to internal automation platforms. A poorly prepared prompt can lead to:

- Incorrect or misleading responses

- Regulatory risks (e.g., GDPR violations)

- Failures and increased token usage

- Unpredictable behavior

On a broader level, prompt engineering helps ensure LLMs respond in line with company aims, tone, and policies.

Therefore, it’s no surprise that the global prompt engineering market was valued at $222.1 million in 2023 and is expected to grow at a compound annual growth rate of 32.8% from 2024 to 2030.

Challenges of Prompt Management in Corporate Environments

How easy (or hard) do you think it is to get practical results from AI? Statista’s research, for example, indicates that generative AI and effective prompt engineering are the business areas that require the most AI skills.

Indeed, managing prompts in a commercial setting can easily turn into chaos. It appears simple at first glance: just write some instructions for an AI model and you’re done.

But as companies begin to apply AI in more departments and services, the number of prompts adds up. Without a structured system to cope with it, working with AI will become sloppy, haphazard, and hard to maintain.

First, prompts are often stored in random places: inside code, shared documents, or even on someone’s desktop.

When changes occur, there is typically no history of who changed them, what was changed, or why. Therefore, if something breaks or the AI starts giving odd responses, it is difficult to identify what happened or how to fix it.

| Challenge | Summary |
| --- | --- |
| Scattered Storage | Prompts are saved in random places, making them hard to manage. |
| No Version Control | Changes aren’t tracked, making debugging difficult. |
| Inconsistent Tone | Teams write prompts in isolation, leading to mixed messaging. |
| Duplicate Efforts | Without a shared library, teams often reinvent the wheel. |
| No Testing Process | Prompts go live untested, risking poor AI output. |
| Security Risks | Prompts may expose sensitive data if not properly managed. |

Second, different teams often write their own prompts with their own tone and intent in mind. For instance, marketing normally uses friendly, accessible words, while legal teams use prudent and clear language.

Without shared directions or templates, the output can be very different across departments, which may lead to user confusion, inconsistency in brand voice, and even in some cases, legal or compliance problems.

Third, because there’s rarely a central prompt library or common working area, teams often don’t know what others are working on.

As a result, they may find themselves recreating similar prompts from scratch, duplicating work, or even using slightly different prompts for the same task.

Fourth, in most cases, prompts are written and used right away, without being sufficiently tested. However, even slight variations in wording can have a tremendous impact on how the AI responds.

Without a system for testing or comparing prompt versions, companies risk deploying prompts that don’t work well.

Lastly, there are serious security and privacy concerns. Prompts can include internal business logic, sensitive customer data, or information subject to strict regulations.

If these prompts are not stored appropriately or are accessible to too many users, they can lead to data leaks or compliance violations.

What Is a Prompt Engineering Management System (PEMS)?

A prompt engineering management system is a tool for storing, testing, and iterating on AI prompts.

That is, PEMS acts as a control panel for everything prompt-related: instead of having prompts scattered across code files, documents, or spreadsheets, departments can bring them into one portal (repository) to:

- Write and edit

- Classify and label by preferred categories

- Track changes

- Test prompts against real systems

- Work with others

In short, PEMS treats prompts like any other company asset, just like code or designs. It helps ensure that AI model inputs are high-quality, uniform, and ready for corporate use.

Key Features of a Prompt Management System

Just as better ingredients make a better meal, good input into a generative AI model makes for better output. A well-designed PEMS makes it easier to use AI prompts skillfully, safely, and sensibly, but the following components are necessary for it to work well:

- Centralized Repository: PEMS houses all templates in one location, which makes it easy for all the team members to find, rework, and contribute to prompts without having to search for them in different files or systems.

- Version Control: PEMS tracks every change made to a prompt. You can see who changed it, when, and why. If something goes wrong, you can go back to an earlier version to fix it. This helps keep prompts working properly over time.

- Standardized Templates: PEMS provides templates and best practices for prompt engineering so that all prompts are written in a similar format and style.

- Testing and Validation: PEMS enables departments to test prompts before using them. Simply put, they can check whether the AI produces correct answers and detect faults before they impact users.

- Integration of Feedback: Whenever users notice a problem with a prompt, they can add feedback to the system for further adjustment.

- Access Control: PEMS regulates who can create, alter, or read prompts to keep commercial information confidential and ensure that only authorized users make changes.

- Collaboration Tools: PEMS allows teams to work together: share prompts, suggest improvements, and keep everything aligned across the entire company.
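To make a few of these features concrete, here is a minimal, in-memory sketch of a prompt repository that combines a central store, version history, rollback, and a simple editor check. It is an illustration under stated assumptions, not a description of any particular PEMS product: the class names `PromptVersion` and `PromptRepository`, the `editors` set, and the sample prompts are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class PromptVersion:
    """One immutable revision: what changed, who changed it, when, and why."""
    text: str
    author: str
    reason: str
    created_at: datetime


@dataclass
class PromptRepository:
    """Hypothetical in-memory store mapping a prompt name to its versions."""
    editors: set[str]  # users allowed to change prompts (assumed access model)
    _history: dict[str, list[PromptVersion]] = field(default_factory=dict)

    def save(self, name: str, text: str, author: str, reason: str) -> int:
        """Append a new version and return its 1-based version number."""
        if author not in self.editors:
            raise PermissionError(f"{author} may not edit prompts")
        versions = self._history.setdefault(name, [])
        versions.append(
            PromptVersion(text, author, reason, datetime.now(timezone.utc))
        )
        return len(versions)

    def latest(self, name: str) -> PromptVersion:
        """Return the most recent version of a prompt."""
        return self._history[name][-1]

    def history(self, name: str) -> list[PromptVersion]:
        """Return a copy of all versions, oldest first."""
        return list(self._history[name])

    def rollback(self, name: str, version: int, author: str) -> int:
        """Restore an earlier version by re-saving it, so no history is lost."""
        old = self._history[name][version - 1]
        return self.save(name, old.text, author, f"rollback to v{version}")


repo = PromptRepository(editors={"alice"})
repo.save("support_greeting", "Greet the customer politely.", "alice", "initial draft")
repo.save("support_greeting", "Greet the customer.", "alice", "shorten")
repo.rollback("support_greeting", 1, "alice")
print(repo.latest("support_greeting").text)
```

A rollback here is stored as a new version and nothing is deleted, which keeps the audit trail of who changed what intact. A production system would back this with a database and a fuller permission model.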

How PEMS Streamlines Prompt Quality and Consistency

Utilizing AI in business works only if the instructions we give to the AI are succinct, unambiguous, and accurately written. A prompt engineering management system makes that possible.

Instead of writing prompts in different places and formats, PEMS gives staff one system to write and manage them all. Thus, all your team members can work with the same configuration and style, which makes the AI’s answers more consistent and professional.

PEMS also makes it simple to test prompts before use. Similar to desktop software or mobile apps, prompts can be tried out to see how the AI reacts. If something is not right or the AI application gives wrong answers, the team can catch it before the prompt reaches users.
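As a rough illustration of pre-release testing, the sketch below runs a prompt template against a handful of test cases and reports which ones fail. To keep the example runnable offline, the model is replaced by a stub function. `fake_model`, `run_prompt_checks`, and the sample cases are assumptions for illustration, and a real setup would call the company's actual LLM endpoint.

```python
from typing import Callable


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call so the example runs offline (assumption)."""
    if "refund" in prompt.lower():
        return "Refunds are processed within 5 business days."
    return "I'm not sure."


def run_prompt_checks(
    template: str,
    cases: list[tuple[str, str]],
    model: Callable[[str], str],
) -> list[str]:
    """Return the user inputs whose answers lack the required phrase."""
    failures = []
    for user_input, required_phrase in cases:
        answer = model(template.format(question=user_input))
        if required_phrase.lower() not in answer.lower():
            failures.append(user_input)
    return failures


template = "You are a support agent. Answer briefly.\nQuestion: {question}"
cases = [
    ("How long does a refund take?", "business days"),
    ("Where is my parcel?", "tracking"),
]
failures = run_prompt_checks(template, cases, fake_model)
print(failures)
```

A simple phrase check like this is only a starting point. Teams often add checks for tone, length, or forbidden content as their test suites grow.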

Version control is another helpful feature. With PEMS, teams can track changes to the prompt text over time. You’ll know who altered it, when, and why.

If a new version of a prompt causes an issue, you can switch back to a previous version.
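One simple way to see exactly what changed between two prompt versions is a line-by-line diff. The sketch below uses Python's standard `difflib` module. The two prompt texts and the `prompt_diff` helper are made up for illustration.

```python
import difflib


def prompt_diff(old: str, new: str) -> list[str]:
    """Return a unified diff between two prompt versions, line by line."""
    return list(
        difflib.unified_diff(
            old.splitlines(),
            new.splitlines(),
            fromfile="v1",
            tofile="v2",
            lineterm="",
        )
    )


# Hypothetical prompt versions used only for this example.
v1 = "You are a support agent.\nAnswer in a friendly tone."
v2 = "You are a support agent.\nAnswer in a formal tone."

diff = prompt_diff(v1, v2)
for line in diff:
    print(line)
```

Lines starting with `-` were removed and lines starting with `+` were added, so a reviewer can spot the tone change at a glance before deciding whether to roll back.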

PEMS also allows teams to work together. Having all the prompts stored in one spot helps share and reuse others’ work. A prompt designed by the HR team, for example, can be tweaked by the legal team or accounting department.

Finally, PEMS is open to feedback. When users have difficulties with prompts, that feedback can be entered into the system right away for revision and retesting.

Use Cases for PEMS in Enterprise AI Workflows

With the definition and fundamental components of PEMS covered, it is time to look at its main applications:

1. Customer Support Chatbots

Many businesses employ AI chatbots to respond to customer inquiries. With PEMS, teams can control and refine the prompts that instruct the chatbot on what to say. That helps in making responses more helpful, friendly, and on-brand even when the chatbot answers a thousand different questions.

2. Internal Knowledge Assistants

Some companies use AI software to help workers find information faster. For example, an HR assistant can answer questions about vacation time, or a contract law assistant can explain the terms of a contract. PEMS keeps all the prompts behind these tools correct, concise, and up-to-date.

3. Content Creation and Marketing

AI can be widely employed by marketing teams to draft emails, ads, product descriptions, etc. PEMS allows them to save and refine prompts in accordance with the brand voice and messaging guidelines so that AI stays on-brand irrespective of who employs it.

4. Code Generation and Developer Tools

Using AI, developers can write code, generate documentation, or debug. Through PEMS, developers can maintain prompts that generate consistent results across several tools and programming languages without having to rewrite them.

5. Data Analysis and Report Generation

AI can be used to convert raw data into meaningful reports or summaries. PEMS keeps the prompts that drive this process in line with business goals so that they produce uniform output even as the data changes.

6. Training and Onboarding

AI systems can help train new staff by answering questions or helping them navigate procedures. PEMS helps keep the underlying prompts updated and correct, so new employees get accurate information as a result.

How SCAND Can Help You Build and Deploy a Custom PEMS

At SCAND, we understand that every company has its own way of working with AI. Therefore, we don’t offer a one-size-fits-all solution. Instead, we offer AI development services so that any company can get a custom PEMS aligned with its specific conditions, tools, and workflows.

We start by figuring out how your teams are already using AI: for chatbots, content generation, data analysis, or helping developers write code.

We then assemble a system that brings all your prompt templates together into one hub with the features you need most, such as version control, testing, and protected access.

Our development team has rich experience with AI integrations, enterprise software solutions, and workflow automation. So whether you need to integrate your PEMS into internal applications, cloud platforms, or third-party tools, we can do that.

We also focus on making your system easy to use. You won’t have to be an AI developer to write or edit prompts. We can build a clean, user-friendly interface so that anyone—your marketing teams, support staff, legal, HR—can manage prompts without ever writing a line of code.

Finally, we can help you scale your system when you expand your use of AI. Whether you’re starting out small or are working with hundreds of prompts between teams, we’ll have your custom PEMS ready to grow with you.