What Is an NLP Chatbot and How Natural Language Processing Chatbots Work

Have you ever wondered why a bot on a website seems to understand you, even if you misspell or write informally? It’s due to NLP — Natural Language Processing.

NLP is a set of techniques that lets software “read” your text almost like a human being: it recognizes the meaning, determines your intent, and selects an appropriate response. It combines linguistics, machine learning, and modern language models such as GPT.

Introduction to NLP Chatbots

Today’s users don’t want to wait — they expect clear, instant answers without unnecessary clicks. That’s exactly what NLP chatbots are built for: they understand human language, process natural-language queries, and instantly deliver the information users are looking for.

They connect with CRMs, recognize emotions, understand context, and learn from every interaction. That’s why they are now essential for modern AI-powered customer service, which includes everything from online shopping to digital banking and health care support.

More and more companies are using chatbots for the first point of contact with customers — a moment that needs to be as clear, helpful, and trustworthy as possible.

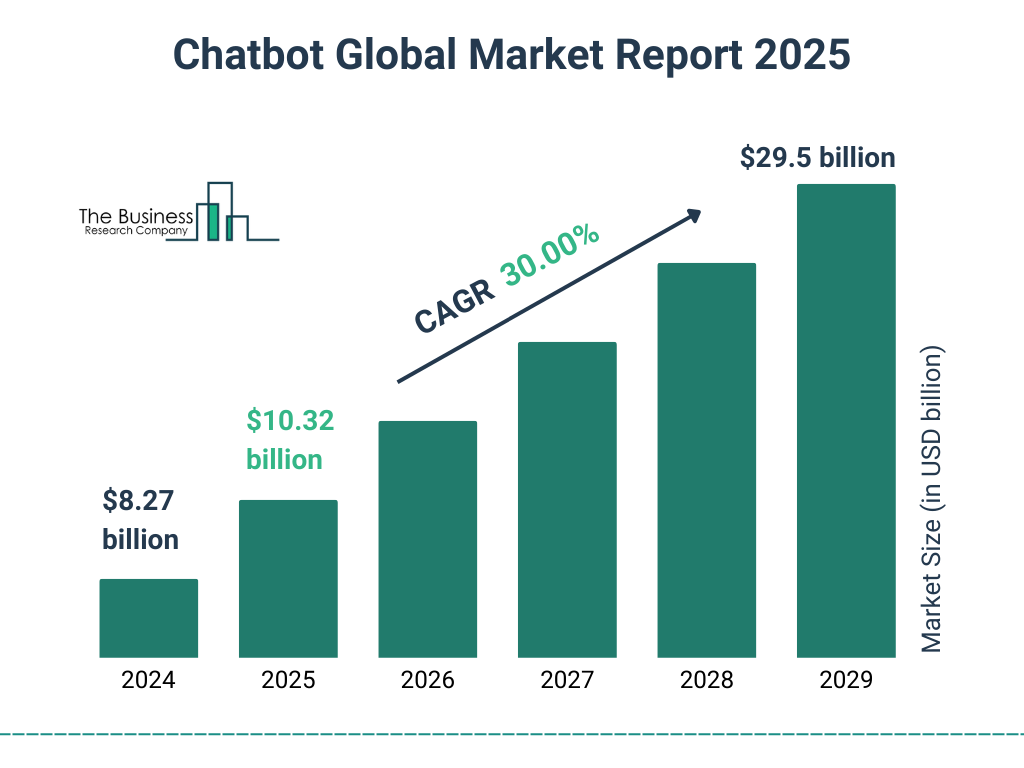

The Business Research Company published a report that demonstrates how quickly the chatbot business is developing. The market, valued at $10.32 billion in 2025, is forecast to expand to $29.5 billion by 2029, maintaining a strong compound annual growth rate of roughly 30%.

Chatbot market 2025, The Business Research Company

What Is Natural Language Processing (NLP)?

Natural Language Processing (NLP) helps computers work with human language. It’s not just about reading words. It’s about getting the meaning behind them — what someone is trying to say, what they want, and sometimes even how they feel.

NLP shows up in many everyday applications:

- Modern word processors predict and suggest the rest of a word or sentence as you type.

- You say to your voice assistant, “Play something relaxing,” and it interprets the context to work out what you want.

- A customer writes in a chat, “Where’s my order?” or “My package hasn’t shown up.” The bot recognizes both as delivery questions and responds appropriately.

- Google no longer relies on keyword matching alone. It interprets the contextual meaning of a query, even a vague one such as “the movie where the guy loses his memory.”

How an NLP Chatbot Works: Step-by-Step Workflow

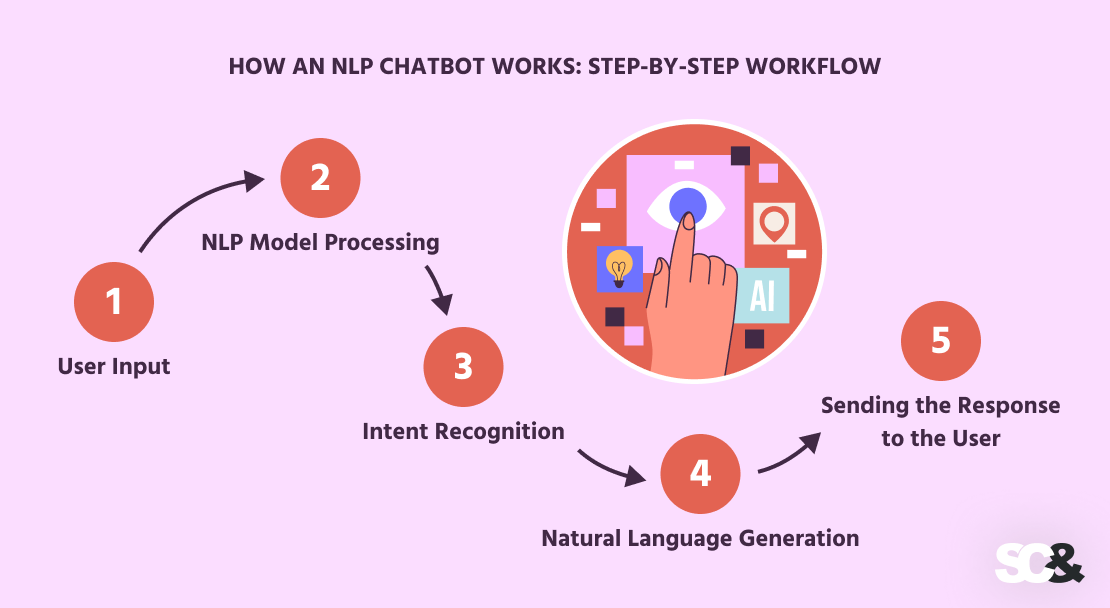

Creating a dialog with an NLP chatbot is not just a question-and-answer exercise. There’s a chain of operations going on inside that turns human speech into a meaningful bot response. Here’s how it works step by step:

1. User Input

The user enters a message in the chat, for example: “I want to cancel my order.” The input can take several forms:

- Free text with typos or slang

- A question in unstructured form

- A command phrased in different ways: “Please cancel the order,” “Cancel the purchase,” etc.

2. NLP Model Processing

The bot analyzes the message using NLP components:

- Tokenization — splitting into words and phrases

- Lemmatization — converting words to their base form

- Syntax analysis — identifying parts of speech and structure

- Named Entity Recognition (NER) — extracting key data (e.g., order number, date)

From this analysis, the bot understands that “cancel” is the action and “order” is the object.
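To make the processing step more concrete, here is a minimal sketch in plain Python. It is not how any particular platform does it: the lemma dictionary and the `#12345` order-number format are assumptions made up for illustration, syntax analysis is left out, and a real bot would normally rely on a library such as spaCy or NLTK.

```python
import re

# Toy lemma dictionary (an assumption for this sketch); real systems use a
# linguistic library instead of a hand-written mapping.
LEMMAS = {
    "canceled": "cancel", "cancelled": "cancel", "cancelling": "cancel",
    "orders": "order", "wanted": "want", "wants": "want",
}

# Hypothetical order-number format: "#" followed by digits.
ORDER_ID = re.compile(r"#(\d+)")


def tokenize(text):
    """Tokenization: split the text into lowercase word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def lemmatize(tokens):
    """Lemmatization: map each token to its base form when we know one."""
    return [LEMMAS.get(token, token) for token in tokens]


def extract_entities(text):
    """Named entity recognition, reduced here to finding an order number."""
    match = ORDER_ID.search(text)
    return {"order_id": match.group(1)} if match else {}


def analyze(text):
    """Run the toy pipeline and return tokens plus extracted entities."""
    return {
        "tokens": lemmatize(tokenize(text)),
        "entities": extract_entities(text),
    }


print(analyze("I wanted to cancel orders #12345"))
```

The output contains the normalized tokens and the order number, which is the kind of structured data the later steps work with.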

3. Intent Recognition

The chatbot determines what the user wants. In this case, the intent is order cancellation.

Additionally, it takes into account:

- Emotional tone (irritation, urgency)

- Conversation history (context)

- Whether a clarifying question is needed (if information is insufficient)
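As a rough illustration of intent recognition, the sketch below scores a message against keyword sets and asks for clarification when nothing matches. The intent names and keywords are hypothetical, and production bots typically use a trained classifier rather than keyword counts.

```python
import re

# Hypothetical intents and trigger words, chosen only for this example.
INTENT_KEYWORDS = {
    "cancel_order": {"cancel", "refund", "return"},
    "order_status": {"where", "status", "track", "shown"},
}


def recognize_intent(message, min_score=1):
    """Return the best-matching intent, or "clarify" if nothing matches."""
    tokens = set(re.findall(r"[a-z]+", message.lower()))
    # Score each intent by how many of its keywords appear in the message.
    scores = {
        intent: len(tokens & keywords)
        for intent, keywords in INTENT_KEYWORDS.items()
    }
    best = max(scores, key=scores.get)
    if scores[best] < min_score:
        return "clarify"  # not enough information: ask a clarifying question
    return best


print(recognize_intent("I want to cancel my order"))  # cancel_order
print(recognize_intent("Where's my order?"))          # order_status
print(recognize_intent("Hello there"))                # clarify
```

The `min_score` threshold is the simplest possible stand-in for a confidence check: when the bot is not sure, it asks instead of guessing.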

4. Natural Language Generation

Based on the intent and data, the bot generates a meaningful and clear response. The response can come from:

- A static template-based reply

- Text generated dynamically by the NLG module

- Data retrieved through a CRM or API integration (e.g., the current order status)

Example response:

“Got it! I’ve canceled order №12345. The refund will be processed within 3 business days.”
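The template-based option is the easiest one to sketch. In the example below, the templates, intent names, and field names are all assumptions, with wording adapted from the example response above. If a required value is missing, the bot asks for it instead of sending a broken reply.

```python
# Hypothetical templates keyed by intent.
TEMPLATES = {
    "cancel_order": (
        "Got it! I've canceled order #{order_id}. "
        "The refund will be processed within {days} business days."
    ),
    "order_status": "Order #{order_id} is currently {status}.",
}


def generate_response(intent, **data):
    """Fill the template for an intent with the data collected so far."""
    template = TEMPLATES.get(intent)
    if template is None:
        return "Sorry, I didn't catch that. Could you rephrase?"
    try:
        return template.format(**data)
    except KeyError as missing:
        # A placeholder had no value: ask the user for the missing detail.
        field_name = missing.args[0].replace("_", " ")
        return f"Could you share your {field_name}?"


print(generate_response("cancel_order", order_id="12345", days=3))
print(generate_response("cancel_order", days=3))
```

A generative NLG module would replace `template.format` with a model call, but the surrounding logic (pick by intent, check for missing data) tends to stay the same.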

5. Sending the Response to the User

The final step — the bot sends the ready response to the interface, where the user can:

- Continue the conversation

- Confirm/cancel the action

- Proceed to the next question

NLP Chatbots vs. Rule-Based Chatbots: Key Differences

When developing a chatbot, it is important to choose the right approach, because it determines how useful, flexible, and adaptable the bot will be in real-life scenarios. Broadly, chatbots fall into two types: rule-based and NLP-based.

The first one works according to predefined rules, while the second one uses natural language processing and machine learning. Below is a comparison of the key differences between these approaches:

| Aspect | Rule-Based Chatbots | NLP Chatbots |
| --- | --- | --- |
| How they work | Use fixed rules: “if this, then that.” | Use an AI agent to figure out what the user really means. |
| Conversation style | Follow strict commands. | Can handle different ways of asking the same thing. |
| Language skills | Don’t actually “understand”; they just match keywords. | Understand the message as a whole, not just the words. |
| Learning ability | They don’t learn; once set up, that’s how they stay. | Get smarter over time by learning from new interactions. |
| Context awareness | Don’t keep track of previous messages. | Remember the flow of the conversation and respond accordingly. |
| Setup | Easy to build and launch quickly. | Takes longer to develop but offers more depth and flexibility. |
| Example request | “1: cancel order” | “I’d like to cancel my order. I don’t need it anymore.” |

Key Differences Between Rule-Based and NLP Chatbots

Strengths and Limitations

Both rule-based and NLP chatbots have their pros and cons. The best option depends on what you’re building, your budget, and what kind of customer experience your users expect. Here’s a closer look at what each type brings to the table — and where things can get tricky.

Advantages of Rule-Based Chatbots:

- Easy to build and manage

- Reliable for handling standard, predictable flows

- Works well for FAQs and menu-based navigation

Limitations of Rule-Based Chatbots:

- Struggle with unusual or unexpected queries

- Can’t process natural language

- Lack understanding of context and user intent

Advantages of NLP Chatbots:

- Understand free-form text and different ways of phrasing

- Can recognize intent, emotions, even typos and errors

- Support natural conversations and remember context

- Learn and improve over time

Limitations of NLP Chatbots:

- More complex to develop and test

- Require high-quality training data

- May give suboptimal answers if not trained well

When to Use Each Type

There’s no one-size-fits-all solution when it comes to chatbots. The best choice really depends on what you need the bot to do. For simple, well-defined tasks, a basic rule-based bot might be all you need. But if you’re dealing with more open-ended conversations or want the bot to understand natural language and context, an NLP-based solution makes a lot more sense.

Here’s a quick comparison to help you figure out which type of chatbot fits different use cases:

| Use Case | Recommended Chatbot Type | Why |
| --- | --- | --- |
| Simple navigation (menus, buttons) | Rule-Based | Doesn’t require language understanding, easy to implement |
| Frequently Asked Questions (FAQ) | Rule-Based or Hybrid | Scenarios can be predefined in advance |
| Support with a wide range of queries | NLP Chatbot | Requires flexibility and context awareness |
| E-commerce (order help, returns) | NLP Chatbot | Users phrase requests differently, personalization is important |
| Temporary campaigns, promo offers | Rule-Based | Quick setup, limited and specific flows |
| Voice assistants, voice input | NLP Chatbot | Needs to understand natural speech |

Chatbot Use Cases and Best-Fit Technologies

Machine Learning and Training Data

Machine learning is what makes smart NLP chatbots truly intelligent. Unlike bots that stick to rigid scripts, a trainable model can actually understand what people mean — no matter how they phrase it — and adapt to the way real users talk.

At the core is training on large datasets made up of real conversations, known as training data. Each user message in the dataset is labeled with what the user wants (intent), what information the message contains (entities), and what the correct response should be.

For example, the bot learns that “I want to cancel my order,” “Please cancel my order,” and “I no longer need the item” all express the same intent — even though the wording is different. The more examples it sees, the more accurately the model performs.
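Here is a deliberately tiny sketch of what learning from labeled examples can look like. The dataset and intent names are hypothetical, and the "model" is just word counts per intent. Real chatbots use far larger datasets and proper classifiers, but the principle is the same: the labels teach the bot which wordings belong together.

```python
import re
from collections import Counter, defaultdict

# Hypothetical labeled examples: (user message, intent).
TRAINING_DATA = [
    ("I want to cancel my order", "cancel_order"),
    ("Please cancel my order", "cancel_order"),
    ("I no longer need the item", "cancel_order"),
    ("Where is my package", "order_status"),
    ("My package hasn't shown up", "order_status"),
]


def tokenize(text):
    return re.findall(r"[a-z]+", text.lower())


def train(examples):
    """Count how often each word appears under each intent."""
    counts = defaultdict(Counter)
    for text, intent in examples:
        counts[intent].update(tokenize(text))
    return counts


def predict(counts, text):
    """Pick the intent whose training words overlap most with the message."""
    tokens = tokenize(text)
    scores = {
        intent: sum(words[token] for token in tokens)
        for intent, words in counts.items()
    }
    return max(scores, key=scores.get)


model = train(TRAINING_DATA)
print(predict(model, "cancel the item please"))  # cancel_order
print(predict(model, "package still missing"))   # order_status
```

Note that neither test phrase appears in the training data. The bot handles them only because it has seen related wording, which is why adding more varied examples improves accuracy.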

But it’s not just about piling up chat logs. To teach a chatbot well, the data needs to be cleaned up and structured: labeling what the user actually wants (the intent), extracting key details such as order numbers, addresses, names, and dates, noting common typos and errors, and capturing the different ways people phrase the same request.

It’s a team effort: analysts, linguists, and data scientists all play a part in making sure the bot really gets how people talk.

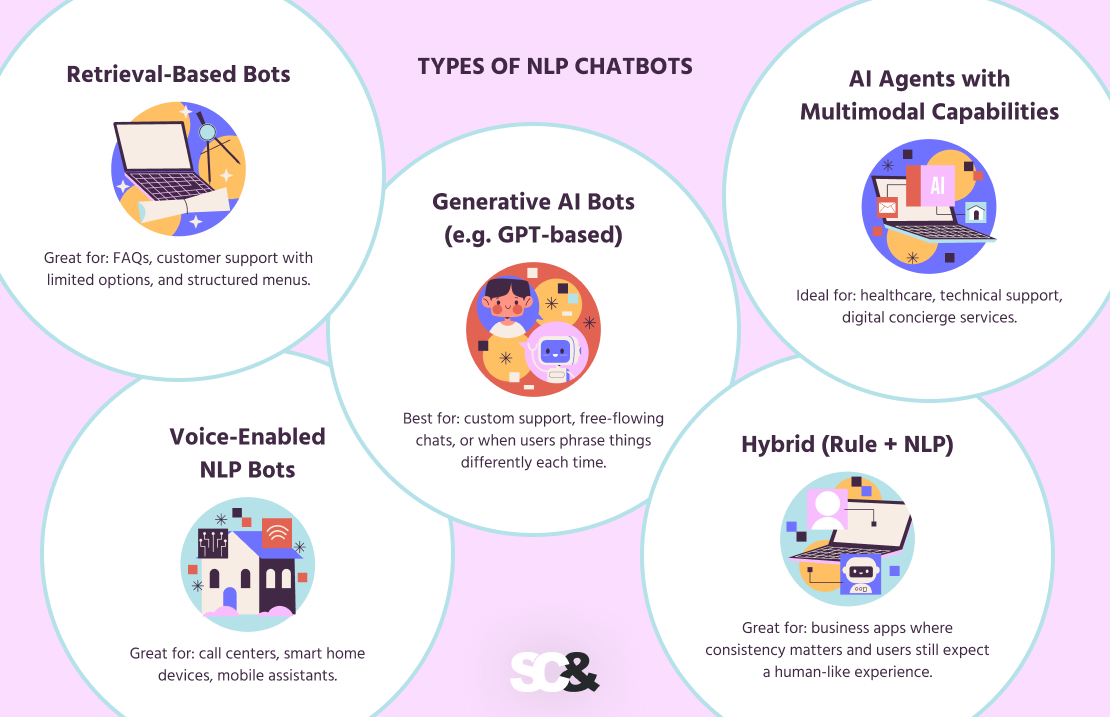

Types of NLP Chatbots

Not all chatbots are built the same. Some follow simple rules, others feel almost like real people. And depending on what your business needs — fast answers, deep conversations, or even voice and image support — there’s a type of chatbot that fits just right. Here’s a quick guide to the most common kinds you’ll come across in 2025:

Retrieval-Based Bots

These bots are like smart librarians. They don’t invent anything — they just pick the best response from a list of answers you’ve already given them. If someone asks a question that’s been asked before, they give an instant reply. Great for: FAQs, customer support with limited options, and structured menus.
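A retrieval-based bot can be sketched in a few lines. The example below picks the stored answer whose question shares the most words with the user's query (Jaccard similarity). The FAQ entries and the 0.2 threshold are assumptions for illustration. Real systems often use embeddings or a search index instead of word overlap.

```python
import re

# Hypothetical FAQ: stored question -> prepared answer.
FAQ = {
    "How do I cancel my order?": "Open Orders, pick the order, and choose Cancel.",
    "How long does delivery take?": "Delivery usually takes 3 to 5 business days.",
    "What payment methods do you accept?": "We accept cards and bank transfers.",
}


def tokens(text):
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(query, min_similarity=0.2):
    """Return the best stored answer, or None if nothing is close enough."""
    query_tokens = tokens(query)
    best_answer, best_score = None, 0.0
    for question, answer in FAQ.items():
        question_tokens = tokens(question)
        union = query_tokens | question_tokens
        # Jaccard similarity: shared words divided by all distinct words.
        score = len(query_tokens & question_tokens) / len(union) if union else 0.0
        if score > best_score:
            best_answer, best_score = answer, score
    return best_answer if best_score >= min_similarity else None


print(retrieve("how can I cancel an order"))
print(retrieve("tell me a joke"))  # None: nothing in the list fits
```

The second call returns `None` on purpose: a retrieval bot never invents an answer, so anything outside its list has to go to a fallback.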

Generative AI Bots (e.g. GPT-based)

These are the ones that can truly converse. They do not simply reply with predetermined responses; they create their own based on your input. They handle non-linear conversations best and can match virtually any tone, style, or sense of humor.

Best for: personalized support, free-flowing conversations, or situations where users rarely phrase things the same way twice.

AI Agents with Multimodal Capabilities

These agents can do more than just read text. You can chat with them, send an email, or attach a document, and they know how to deal with it. Think of them as digital assistants with superpowers: they can “see,” “hear,” and “understand” simultaneously. Ideal for: healthcare, technical support, digital concierge services.

Voice-Enabled NLP Bots

These are the bots that you speak to — and they speak back. They use speech-to-text to understand your voice and text-to-speech to reply. Perfect when you’re on the go, multitasking, or just prefer talking over typing. Great for: call centers, smart home devices, mobile assistants.

Hybrid (Rule + NLP)

Why choose between simple and smart? Hybrid bots mix rule-based logic for easy tasks (like “press 1 to cancel”) with NLP to handle more natural, complex messages.

They’re flexible, scalable, and reliable — all at once. Great for: business apps where consistency matters and users still expect a human-like experience.
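One way to picture a hybrid bot is as a router: exact rules are checked first, and only messages that match no rule go to the NLP layer. In this sketch the menu codes and keyword sets are hypothetical, and the keyword lookup merely stands in for a real NLP model.

```python
import re

# Hypothetical exact-match rules, e.g. menu buttons or "press 1" commands.
MENU_RULES = {"1": "cancel_order", "2": "order_status"}

# Hypothetical keyword sets standing in for a trained NLP model.
NLP_KEYWORDS = {
    "cancel_order": {"cancel", "refund"},
    "order_status": {"where", "track", "status"},
}


def route(message):
    """Return (intent, source): try the rules first, then fall back to NLP."""
    text = message.strip().lower()
    if text in MENU_RULES:
        return MENU_RULES[text], "rule"
    words = set(re.findall(r"[a-z]+", text))
    for intent, keywords in NLP_KEYWORDS.items():
        if words & keywords:
            return intent, "nlp"
    return "fallback", "none"


print(route("1"))                             # ('cancel_order', 'rule')
print(route("I'd like to cancel my order"))   # ('cancel_order', 'nlp')
print(route("hmm"))                           # ('fallback', 'none')
```

Returning the source alongside the intent makes it easy to log which layer handled each message, which helps when deciding which flows deserve a dedicated rule.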

Build an NLP Chatbot: Chatbot Use Cases

Creating an NLP chatbot is a process that combines business logic, linguistic analysis, and technical implementation. Here are the key stages of development:

Define Use Cases and Intent Structure

The first step is to determine why you need a chatbot and what tasks it will perform, such as handling requests, customer support, bookings, or answers to frequently asked questions.

After that, the structure of intents is formed, i.e., a list of user intentions (for example, “check order status”, “cancel subscription”, “ask a question about delivery”). Each intent should be clearly described and covered with examples of phrases with which users will express it.
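An intent structure can start out as plain data. The sketch below is one possible shape, not a format required by any platform: the `Intent` class, its fields, and the two-example minimum are assumptions. The small check at the end flags intents that still need more example phrases.

```python
from dataclasses import dataclass, field


@dataclass
class Intent:
    name: str
    description: str
    examples: list = field(default_factory=list)
    required_entities: list = field(default_factory=list)


# Hypothetical intents based on the examples mentioned above.
INTENTS = [
    Intent(
        "check_order_status",
        "User asks where an order is",
        ["Where is my order?", "Has my package shipped?"],
        ["order_id"],
    ),
    Intent(
        "cancel_subscription",
        "User wants to stop a subscription",
        ["Cancel my subscription", "I want to unsubscribe"],
    ),
    Intent(
        "delivery_question",
        "User asks about delivery terms",
        ["How long does delivery take?"],
    ),
]


def under_covered(intents, min_examples=2):
    """List intents that have fewer example phrases than the minimum."""
    return [intent.name for intent in intents if len(intent.examples) < min_examples]


print(under_covered(INTENTS))  # ['delivery_question']
```

Keeping the list in one place like this also makes overlaps between intents easier to spot before any training starts.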

Choose NLP Engines (ChatGPT, Dialogflow, Rasa, etc.)

The next step is to choose a natural language processing platform or engine. It can be:

- Dialogflow — a popular solution from Google with a user-friendly visual interface

- Rasa — open-source framework with local deployment and flexible customization

- ChatGPT API — powerful LLMs from OpenAI suitable for complex and flexible dialogs

- Amazon Lex, Microsoft LUIS, IBM Watson Assistant — enterprise platforms with deep integration

The choice depends on the level of control, privacy requirements, and integration with other systems.

Train with Sample Dialogues and Feedback Loops

After selecting a platform, the bot is trained on example dialogs. It is important to collect as many variants as possible of the phrases users use to express the same intent.

It is also recommended to set up a process for feedback and periodic retraining. The system should “learn” from new data: improve recognition accuracy and natural language understanding, take typical errors into account, and update the entity dictionary.

Integrate with Frontend (Web, Mobile, Voice)

The next stage is to integrate the chatbot with user channels: website, mobile app, messenger, or voice assistant. The interface should be intuitive and easily adaptable to different devices.

It is also important to provide for fast data exchange with backend systems — CRM, databases, payment systems, and other external services.

Add Fallbacks and Human Handoff Logic

Even the smartest bot will not be able to process 100% of requests. Therefore, it is necessary to implement fallback mechanisms: if the bot does not understand the user, it asks again, offers options, or passes the dialog to an operator.

Human handoff (handoff to a live employee) is a critical element for complex or sensitive situations. It increases trust in the system and helps avoid a negative user experience.
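The escalation path described above (ask again, offer options, hand off) can be sketched as a small state machine. The confidence threshold, the failure limit, and the returned action names are all assumptions. Real platforms expose their own confidence scores and handoff hooks.

```python
from dataclasses import dataclass


@dataclass
class Session:
    failed_attempts: int = 0


def handle_turn(session, intent, confidence, threshold=0.6, max_failures=2):
    """Decide what the bot does next for one user message."""
    if intent is not None and confidence >= threshold:
        session.failed_attempts = 0  # understood: reset the failure counter
        return f"handle:{intent}"
    session.failed_attempts += 1
    if session.failed_attempts > max_failures:
        return "handoff_to_operator"
    if session.failed_attempts == 1:
        return "ask_to_rephrase"
    return "offer_options"


session = Session()
print(handle_turn(session, None, 0.0))            # ask_to_rephrase
print(handle_turn(session, "cancel_order", 0.3))  # offer_options
print(handle_turn(session, None, 0.0))            # handoff_to_operator
```

Resetting the counter after a successful turn keeps one early misunderstanding from pushing an otherwise smooth conversation to an operator.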

Tools and Technologies for NLP Chatbots

These days, chatbots can carry on real conversations, guide people through tasks, and make things feel smooth and natural. What makes that possible? Thoughtfully chosen tools that help teams build chatbots users can actually rely on — clear, helpful, and easy to talk to.

To make it easier to choose the right platform, here’s a comparison table highlighting key features:

| Platform | Access Type | Customization Level | Language Support | Integrations | Best For |
| --- | --- | --- | --- | --- | --- |
| OpenAI / GPT-4 | Cloud (API) | Medium | Multilingual | Via API | AI assistants, text generation |
| Google Dialogflow | Cloud | Medium | Multilingual | Google Cloud, messaging platforms | Rapid development of conversational bots |
| Rasa | On-prem / Cloud | High | Multilingual | REST API | Custom on-premise solutions |
| Microsoft Bot Framework | Cloud | High (via code) | Multilingual | Azure, Teams, Skype, others | Enterprise-level chatbot applications |
| Amazon Lex | Cloud | Medium | Limited | AWS Lambda, DynamoDB | Voice and text bots within the AWS ecosystem |
| IBM Watson Assistant | Cloud | Medium | Multilingual | IBM Cloud, CRM, external APIs | Business analytics and customer support |

Comparison of Leading NLP Chatbot Development Platforms

Best Practices for NLP Chatbot Development

Creating an efficient NLP chatbot relies not only on the quality of the model but also on how the model is trained, tested, and improved. The following core practices will help make the bot accurate, useful, and sustainable in the real world.

Keep Training Data Updated

Regularly updated training data helps the chatbot adapt to changes in user behavior and language patterns. Up-to-date data increases the accuracy of intent recognition and minimizes errors in query processing.

Use Clear Intent Definitions

Well-defined intents remove ambiguity, overlap, and conflicts between contexts. A well-organized intent model improves query understanding and speeds up the bot’s responses.

Monitor Conversations for Edge Cases

Analysis of real dialogs allows you to identify non-standard cases that the bot fails to handle. Identifying such “corner” scenarios helps to quickly make adjustments and increase the stability of dialog logic.

Combine NLP with Rule-Based Logic for Safety

A chatbot that mixes NLP with some well-placed rules is much better at staying on track. In tricky or important situations, it can help avoid mistakes and stick to your business logic without going off course.

Test with Real Users

Testing with live audiences reveals weaknesses that cannot be modeled in an isolated environment. Feedback from users helps to better understand expectations and behavior, which helps to improve the user experience.

Track Metrics (Fallback Rate, CSAT, Resolution Time)

Keeping an eye on metrics like fallback rate, customer satisfaction, and how long it takes to resolve queries helps you see how well your chatbot is doing — and where there’s room to improve.
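As a rough sketch, these metrics can be computed from conversation logs like this. The log fields are hypothetical, and the definitions are assumptions too: fallback rate is taken as fallbacks per bot turn, and CSAT as the share of ratings of 4 or 5 on a 5-point scale. Teams often define them slightly differently.

```python
from statistics import mean

# Hypothetical conversation logs; the field names are illustrative only.
conversations = [
    {"turns": 4, "fallbacks": 1, "csat": 5, "resolution_seconds": 90},
    {"turns": 6, "fallbacks": 0, "csat": 4, "resolution_seconds": 150},
    {"turns": 10, "fallbacks": 3, "csat": 2, "resolution_seconds": 360},
]


def summarize(logs):
    """Compute fallback rate, CSAT share, and average resolution time."""
    total_turns = sum(c["turns"] for c in logs)
    return {
        "fallback_rate": sum(c["fallbacks"] for c in logs) / total_turns,
        "csat_share": sum(c["csat"] >= 4 for c in logs) / len(logs),
        "avg_resolution_seconds": mean(c["resolution_seconds"] for c in logs),
    }


print(summarize(conversations))
```

For this sample data, the fallback rate is 4 out of 20 turns (0.2), two of three ratings count as satisfied, and the average resolution time is 200 seconds.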

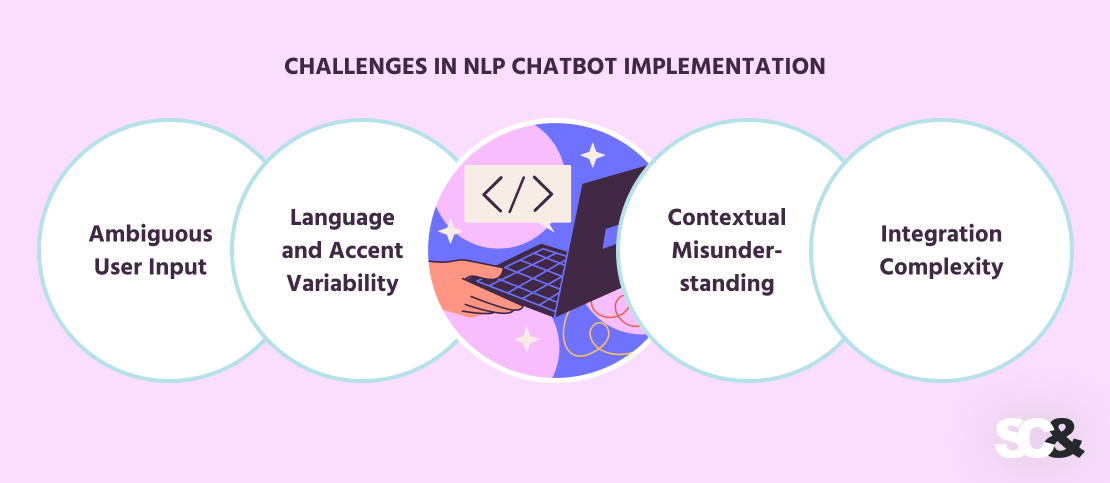

Challenges in NLP Chatbot Implementation

Even though modern NLP chatbots are incredibly capable, bringing them into real-world use comes with its own set of challenges. Knowing about these hurdles ahead of time can help you plan better and build a chatbot that’s more reliable and effective.

Ambiguous User Input

People don’t always say things clearly. Messages can be vague, carry double meanings, or lack context. That makes it harder for the chatbot to understand the user’s intent and can lead to wrong replies. To reduce this risk, it’s important to include clarifying questions and have a well-thought-out fallback strategy.

Language and Accent Variability

A chatbot needs to recognize different languages, dialects, and accents, especially when voice input is involved. If the system isn’t trained well enough on these variations, it can misinterpret what’s being said and break the user experience.

Contextual Misunderstanding

Long or complex conversations can be tricky. If a user changes the topic or uses pronouns like “it” or “that,” the chatbot might lose track of what’s being discussed. This can lead to awkward or irrelevant replies. To avoid this, it’s crucial to implement context tracking and session memory.
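A very small sketch of session memory: the bot remembers the last topic the user mentioned and substitutes it when a later message only says “it” or “that.” The topic list and pronoun set are assumptions for illustration. Real context tracking (coreference resolution, dialog state) is considerably more involved.

```python
import re

PRONOUNS = {"it", "that", "this"}
KNOWN_TOPICS = {"order", "subscription", "refund"}  # hypothetical topic list


class SessionMemory:
    """Remembers the last topic mentioned within one conversation."""

    def __init__(self):
        self.last_topic = None

    def resolve(self, message):
        """Return the message tokens, with pronouns replaced by the last topic."""
        words = re.findall(r"[a-z]+", message.lower())
        mentioned = [word for word in words if word in KNOWN_TOPICS]
        if mentioned:
            self.last_topic = mentioned[-1]  # update memory with the newest topic
            return words
        if self.last_topic:
            return [self.last_topic if word in PRONOUNS else word for word in words]
        return words


memory = SessionMemory()
print(memory.resolve("Where is my order?"))  # ['where', 'is', 'my', 'order']
print(memory.resolve("Can you cancel it?"))  # ['can', 'you', 'cancel', 'order']
```

Without the memory, the second message would reach the intent recognizer as “cancel it,” with no way to tell what “it” refers to.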

Integration Complexity

Connecting a chatbot to tools like CRMs, databases, or APIs often requires extra development work and careful attention to data security, permissions, and sync processes. Without proper integration, the bot won’t be able to perform useful tasks in real business scenarios.

At SCAND, we don’t just build software — we build long-term technology partnerships. With over 20 years of experience and deep roots in AI, deep learning, and natural language processing, we design chatbots that do more than answer questions — they understand your users, support your teams, and improve customer experiences. Whether you’re just starting out or scaling fast, we’re the AI chatbot development company that can help you turn automation into real business value. Let’s create something your customers will love.

Frequently Asked Questions (FAQs)

What is the difference between NLP and an AI chatbot?

Think of AI (Artificial Intelligence) as the big umbrella: it covers all kinds of smart technologies that try to mimic human thinking. NLP (Natural Language Processing) is one specific part of AI that focuses on how machines understand and work with human language, whether it’s written or spoken. An AI chatbot is an application that uses NLP, often alongside other AI techniques, to hold a conversation. So, while all NLP is AI, not all AI is NLP.

Are NLP chatbots the same as LLMs?

Not exactly, though they’re closely related. LLMs (Large Language Models), like GPT, are the engine behind many advanced NLP chatbots. An NLP chatbot might be powered by an LLM, which helps it generate replies, understand complex messages, and even match your tone. But not all NLP bots use LLMs. Some stick to simpler models focused on specific tasks. So it’s more like: some NLP chatbots are built using LLMs, but not all.

How do NLP bots learn from users?

They learn the way people do: from experience. Every time users interact with a chatbot, the system can collect feedback: Did the bot understand the request? Was the reply helpful? Over time, developers (and sometimes the bots themselves) analyze these patterns, retrain the model with real examples, and fine-tune it to make future conversations smoother. It's a kind of feedback loop: the more you talk to it, the smarter it gets (assuming it's set up to learn, of course).

Is NLP only for text, or also for voice?

It’s not limited to text at all. NLP can absolutely work with voice input, too. In fact, many smart assistants — like Alexa or Siri — use NLP to understand what you're saying and figure out how to respond. The process usually includes speech recognition first (turning your voice into text), then NLP kicks in to interpret the message. So yes — NLP works just fine with voice, and it’s a big part of modern voice tech.

How much does it cost to build an NLP chatbot?

If you're building a basic chatbot using an off-the-shelf platform, the cost can be fairly low, especially if you handle setup in-house. But if you're going for a custom, AI-powered assistant that understands natural language, remembers past conversations, and integrates with your tools, you should be prepared for a bigger investment. Costs vary based on complexity, training data, integrations, and ongoing support.