AI Development in Logistics: Analysis of Cargo Transportation Messages

- LLM

- OpenAI (ChatGPT 3.5/4)

- GenAI

- ChatGPT Development

- Anthropic Claude 3 Opus/Sonnet/Haiku

- Llama 3 8B

- Python

- Redis

- PostgreSQL

Challenge

There were several challenges in the project:

- Extracting key data from diverse message formats

- Handling high message volumes while maintaining processing efficiency

- Cost-effective usage of LLM services

Approach

Our approach involved:

- Creating a diverse message test base

- Developing optimal data extraction prompts

- Implementing a queue-based prototype solution

- Testing and optimizing LLM performance

- Balancing performance and budget considerations

- Deploying the production version

Solution Overview

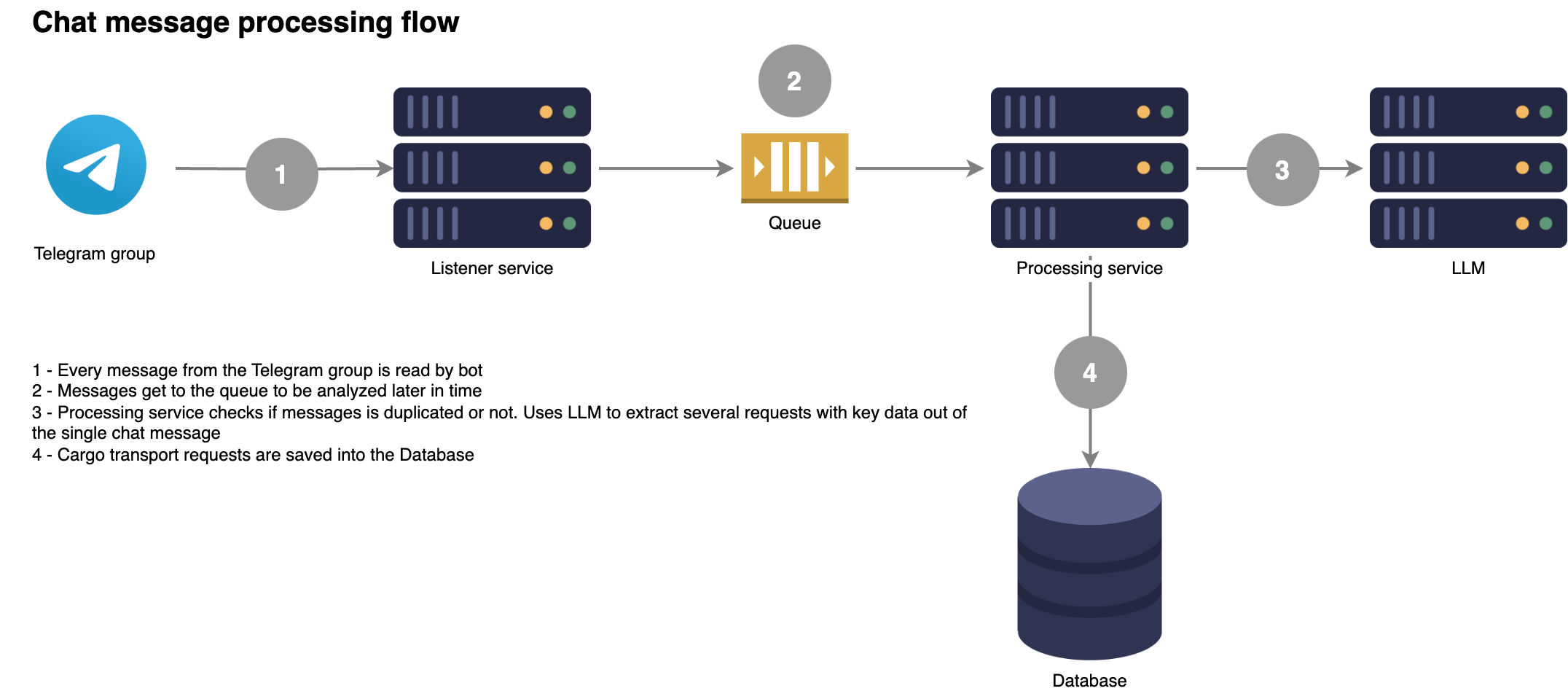

Our solution automates the extraction of cargo transportation requests from Telegram group chats, turning them into organized entries in a database for easy searching. Implemented as a Telegram bot with administrator privileges, the system efficiently manages incoming messages.

The bot listens to the chat continuously, checking for new transportation requests. When it detects a new request, it places the message on a queue for processing so that the incoming data flow is handled reliably.
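The queueing step can be illustrated with a minimal sketch. The project lists Redis in its stack, but the source does not show how the queue is implemented, so this example uses Python's in-process `queue.Queue` as a stand-in. The `ChatMessage` fields and the function names are assumptions made for illustration.

```python
import queue
from dataclasses import dataclass


@dataclass(frozen=True)
class ChatMessage:
    # Hypothetical shape of an incoming chat message; field names are assumptions.
    chat_id: int
    message_id: int
    text: str


def enqueue_message(q: queue.Queue, msg: ChatMessage) -> None:
    """Producer side: the bot handler only enqueues and returns immediately."""
    q.put(msg)


def drain(q: queue.Queue, handle) -> int:
    """Consumer side: process everything currently queued, in arrival order.

    Returns the number of messages handled.
    """
    processed = 0
    while True:
        try:
            msg = q.get_nowait()
        except queue.Empty:
            return processed
        handle(msg)
        q.task_done()
        processed += 1
```

Separating the producer from the consumer in this way means the chat listener never waits on the slower analysis step.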

To maintain data accuracy and avoid duplication, the system first checks the database for similar requests logged within the past 24 hours. If no recent record is found, the message is sent for further analysis.
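A simplified sketch of the 24-hour duplicate check follows. The source does not describe how similarity is measured or how the PostgreSQL lookup is written, so this example makes two assumptions: "similar" is reduced to an exact match after normalizing case and whitespace, and an in-memory dictionary stands in for the database table. All names are hypothetical.

```python
import hashlib
import re
from datetime import datetime, timedelta

DEDUP_WINDOW = timedelta(hours=24)  # the window stated in the text


def fingerprint(text: str) -> str:
    """Collapse case and whitespace so trivially re-posted messages match."""
    normalized = re.sub(r"\s+", " ", text.strip().lower())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def is_recent_duplicate(seen: dict, text: str, now: datetime) -> bool:
    """Return True if the same fingerprint was recorded within the window.

    When the message is not a duplicate, it is recorded with the current time.
    `seen` is an in-memory stand-in for the database lookup (an assumption).
    """
    key = fingerprint(text)
    last = seen.get(key)
    if last is not None and now - last < DEDUP_WINDOW:
        return True
    seen[key] = now
    return False
```

Only messages for which `is_recent_duplicate` returns `False` would be passed on to the LLM, which also avoids paying inference time for repeated posts.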

A key part of our solution is a local Large Language Model (LLM) optimized for Nvidia RTX hardware. This setup lets us extract the relevant information from each message and produce JSON output that organizes the cargo transportation details.
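Model output usually needs to be validated before it is stored. The sketch below shows one way to do that, assuming the model is asked to return a JSON array of request objects. The field names in `REQUIRED_FIELDS` are hypothetical; the project's actual schema is not given in the source.

```python
import json

# Hypothetical schema: the real field names are not shown in the source.
REQUIRED_FIELDS = ("origin", "destination", "cargo")


def parse_requests(raw: str) -> list:
    """Extract a JSON array from model output and keep only well-formed entries.

    Models sometimes wrap JSON in extra text, so the outermost [...] span
    is located before parsing. Any failure yields an empty list.
    """
    start, end = raw.find("["), raw.rfind("]")
    if start == -1 or end <= start:
        return []
    try:
        items = json.loads(raw[start:end + 1])
    except json.JSONDecodeError:
        return []
    if not isinstance(items, list):
        return []
    return [
        item for item in items
        if isinstance(item, dict) and all(item.get(f) for f in REQUIRED_FIELDS)
    ]
```

Returning an empty list on malformed output keeps the queue worker running; rejected outputs could then be logged for prompt refinement.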

We use multi-shot extraction prompts tailored for comprehensive data retrieval. These prompts are regularly refined based on insights from logs, ensuring precise extraction even from complex messages.
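As an illustration of a multi-shot prompt, the sketch below assembles an instruction, a few worked examples, and the new message into a single prompt string. The instruction wording, the example messages, and the JSON fields are all invented for this illustration; the project's real prompts are not shown in the source.

```python
import json

# Hypothetical worked examples, including a negative one with no request.
EXAMPLES = [
    (
        "Need truck 20t Berlin - Warsaw, pallets",
        [{"origin": "Berlin", "destination": "Warsaw", "cargo": "pallets"}],
    ),
    ("Good morning everyone!", []),
]


def build_prompt(message: str, examples=EXAMPLES) -> str:
    """Build a multi-shot prompt: instruction, worked examples, then the query."""
    parts = [
        "Extract cargo transportation requests from the message as a JSON array."
    ]
    for text, expected in examples:
        parts.append(f"Message: {text}\nJSON: {json.dumps(expected)}")
    # The final block is left open so the model completes the JSON.
    parts.append(f"Message: {message}\nJSON:")
    return "\n\n".join(parts)
```

Keeping the examples in a plain list makes it straightforward to add new cases when logs reveal a message format the model handles poorly.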

We chose a local LLM over external services such as OpenAI ChatGPT or Anthropic Claude 3 because, over time, it proved more cost-effective and performed better on our own hardware.

In summary, our solution combines effective message handling with advanced AI-driven data extraction, enabling efficient processing of high message volumes with accuracy while minimizing operational costs.

Key Features

- Processing up to 4000 messages daily

- Usage of a cost-effective local LLM solution

- Telegram bot integration for seamless operation

Result

Thanks to the strong language understanding of LLMs and a sound architecture, the team was able to:

- Extract a list of transportation requests from messages in widely varying formats;

- Process a large number of messages per day (3000-4000) in a flexible manner;

- Save a significant part of the budget by using a local model.