Challenges

The main challenge of the project was to deliver properly documented source code as quickly as possible, under the following constraints:

- Java, Kotlin, and React (TypeScript) files had to be documented according to industry standards: JavaDoc and JSDoc.

- The entire codebase contained around 3200 files.

- The source code was only partially documented (just the complicated parts), while the new requirement was strict: the whole codebase had to be documented according to the standards.

- Completing the task would require a little over 2 months of work.

- The codebase had to stay private.

Approach

To address these challenges, our team followed modern AI-assisted code documentation techniques:

- We selected an open-source LLM that performed well on code.

- Next, we ran trial documentation on sample code using the chosen model.

- After that, we evaluated the results and measured their quality.

- Following that, we rented a hosted server with a powerful GPU.

- Then, we built and tested an application that documented the files.

- Finally, we ran the application over the whole codebase.
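
The last step implies collecting every file that needs documentation. A minimal sketch of that discovery step is shown here, assuming the files are identified by extension; the extension set and the function name `collect_source_files` are illustrative and not taken from the project.

```python
from pathlib import Path

# Assumed extensions for the three languages in the codebase; adjust as needed.
SOURCE_EXTENSIONS = {".java", ".kt", ".ts", ".tsx"}


def collect_source_files(root: Path) -> list[Path]:
    """Return all matching source files under root, sorted for a reproducible order."""
    return sorted(
        path
        for path in root.rglob("*")
        if path.is_file() and path.suffix in SOURCE_EXTENSIONS
    )
```

Sorting the result keeps the processing order stable between runs, which makes it easier to resume an interrupted batch.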

Solution Overview

First, we ran trial code documentation with six open-source models: Gemma3, Deepseek-r1, Phi4, Llama3.3, Codellama, and Qwen 2.5 coder. After comparing the candidates, we chose Qwen 2.5 coder 32B.

Next, we selected a GPU hosting provider. Running inference on the full-size Qwen 2.5 coder model required a lot of GPU memory (about 65 GB).

The NVIDIA H100, with its 80 GB of memory, handled the task well, reaching a generation speed of around 30–50 tokens per second.
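
The memory figure can be sanity-checked with simple arithmetic. The sketch below assumes roughly 32 billion parameters stored as unquantized 16-bit weights; both values are assumptions, and the estimate covers the weights only, not the context cache or runtime overhead.

```python
# Assumptions (not stated in the case study): about 32 billion parameters,
# stored as 16-bit (2-byte) weights with no quantization.
PARAMS = 32e9
BYTES_PER_PARAM = 2
H100_MEMORY_GB = 80


def weights_memory_gb(params: float, bytes_per_param: int) -> float:
    """Memory needed for the weights alone, in decimal gigabytes."""
    return params * bytes_per_param / 1e9


weights_gb = weights_memory_gb(PARAMS, BYTES_PER_PARAM)
headroom_gb = H100_MEMORY_GB - weights_gb
print(f"weights: ~{weights_gb:.0f} GB, headroom on an 80 GB card: ~{headroom_gb:.0f} GB")
# prints: weights: ~64 GB, headroom on an 80 GB card: ~16 GB
```

Under these assumptions the weights alone come close to the reported 65 GB, which is why a single 80 GB card fits the model while smaller cards would not.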

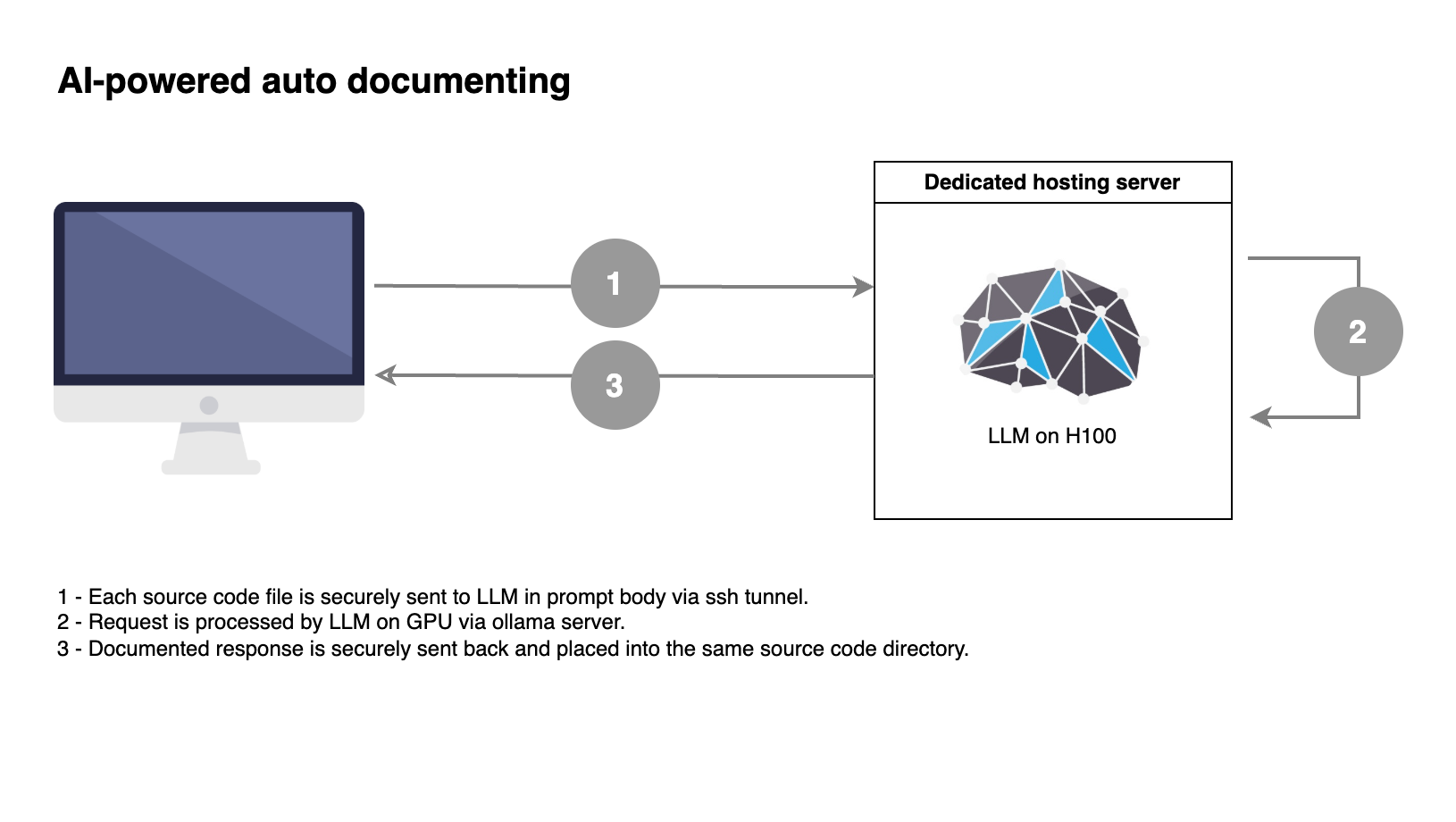

In the next phase, we built an application that communicated with the Ollama server to modify the requested source code file.
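
A minimal sketch of such a call is shown below, using Ollama's `/api/generate` endpoint in non-streaming mode. The URL (Ollama's default local port), the model tag, and the function names `build_payload` and `document_source` are assumptions for illustration; the actual application code is not part of this case study.

```python
import json
import urllib.request

# Assumed setup: Ollama listening on its default local port.
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL = "qwen2.5-coder:32b"  # assumed model tag


def build_payload(source_code: str, instruction: str) -> dict:
    """Build a non-streaming request body for the /api/generate endpoint."""
    return {
        "model": MODEL,
        "prompt": f"{instruction}\n\n{source_code}",
        "stream": False,
    }


def document_source(source_code: str, instruction: str, timeout: float = 600.0) -> str:
    """Send one file to the model and return the generated text."""
    body = json.dumps(build_payload(source_code, instruction)).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["response"]
```

Keeping the payload construction separate from the network call makes the request easy to inspect and test without a running server.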

We also wrote several prompts that worked well with Java, Kotlin, and TypeScript files. The application logged every attempt, provided statistics, and operated in a fault-tolerant manner.
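
One way to combine per-language prompts with logging, statistics, and fault tolerance is sketched below. The prompt texts, the retry count, and the names `PROMPTS` and `process_file` are hypothetical; the `generate` argument stands in for whatever function sends the text to the model.

```python
import logging
from collections import Counter
from pathlib import Path
from typing import Callable, Optional

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("docgen")

# Hypothetical prompt texts; the prompts actually used are not published here.
PROMPTS = {
    ".java": "Add JavaDoc comments to this Java file. Do not change any code.",
    ".kt": "Add JavaDoc-style comments to this Kotlin file. Do not change any code.",
    ".ts": "Add JSDoc comments to this TypeScript file. Do not change any code.",
    ".tsx": "Add JSDoc comments to this TypeScript file. Do not change any code.",
}


def process_file(path: Path, generate: Callable[[str, str], str],
                 stats: Counter, max_attempts: int = 3) -> Optional[str]:
    """Document one file, retrying on failure; return None if it cannot be done."""
    prompt = PROMPTS.get(path.suffix)
    if prompt is None:
        stats["skipped"] += 1
        return None
    source = path.read_text(encoding="utf-8")
    for attempt in range(1, max_attempts + 1):
        try:
            result = generate(source, prompt)
            log.info("ok %s (attempt %d)", path, attempt)
            stats["documented"] += 1
            return result
        except Exception as error:  # one bad file must not stop the batch
            log.warning("failed %s (attempt %d): %s", path, attempt, error)
            stats["failed_attempts"] += 1
    stats["failed"] += 1
    return None
```

A single `Counter` passed through the whole run gives the summary statistics at the end, and a file that still fails after all attempts is counted rather than aborting the batch.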

Finally, we executed the main run. The model took about 10 hours to process the entire codebase. We performed some parts of the run in parallel on two H100-powered machines.

Before the run, we made sure the transport channel was secure and that the remote system did not store any logs.
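
How the work was divided between the two machines is not described. One simple option is a round-robin split of the file list, sketched here; the `shard` function and the sample file names are illustrative only.

```python
def shard(items: list[str], worker_count: int) -> list[list[str]]:
    """Deal items round-robin so each worker gets a similar number of files."""
    return [items[index::worker_count] for index in range(worker_count)]


files = ["A.java", "B.kt", "C.ts", "D.tsx", "E.java"]
first, second = shard(files, 2)
print(first)   # ['A.java', 'C.ts', 'E.java']
print(second)  # ['B.kt', 'D.tsx']
```

Round-robin keeps the shard sizes within one file of each other, although it does not balance by file length, so the two machines may still finish at different times.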

Key Features

- AI-driven automatic documentation of Java/Kotlin/TypeScript source code

- LLM used: Qwen 2.5 coder 32B, served by Ollama on an H100 GPU

- 3000+ files documented in ~10 hours

- Hosting budget: $25

Result

By applying an AI-powered approach and the latest automatic code documentation tools, the team achieved the following results:

- Rapidly documented the whole source code base using LLM-as-a-service.

- Delivered secure execution through dedicated GPU hosting.

- Cut documentation costs by up to 30 times compared to manual work.

- Created a high-quality code base description using JavaDoc/JSDoc standards.
